Regulatory frameworks for Artificial Intelligence (AI) in the pharmaceutical and medical device industries are undergoing significant changes to meet the challenges and opportunities posed by this technology.

AI has progressed rapidly and already benefits the healthcare industry and patients. However, its applications hold much more potential for future improvements.

To ensure the safe, ethical implementation and usage of AI, governments are creating a comprehensive legal framework that strikes a balance between the potential risks and benefits.

As a result, to facilitate the development and use of responsible and beneficial AI, the European Medicines Agency (EMA) developed a work plan for 2023-2028 on artificial intelligence in medicines regulation.

The European Parliament and Council have also recently agreed on the first law regulating artificial intelligence in the world: the EU Artificial Intelligence Act.

The International Coalition of Medicines Regulatory Authorities (ICMRA) and the World Health Organization (WHO) have also published their own recommendations and considerations.

With a particular focus on healthcare and medical devices, these developments reflect a growing recognition that clear regulatory frameworks are necessary to ensure the safe and ethical use of AI.

AI in Pharma

When we talk about AI in pharma, we typically refer to a technique, a model, or an algorithm integrated into computer systems that enables them to learn and reason with data, so that they can perform automated tasks and make decisions and predictions without a human explicitly programming every step.

This ability underpins fields such as Machine Learning (ML), Natural Language Processing (NLP), computer vision, conversational intelligence, and neural networks.

While ML refers to machines learning from data and improving their performance over time, NLP refers to the ability of computers to understand text and spoken words in the same way that humans can.

As a result of Deep Learning (DL), a subset of ML, AI systems are capable of identifying patterns, predicting outcomes, and adapting their behavior based on changing conditions.

Thanks to advances in infrastructure such as the cloud, in hardware and software, and in DL models, together with the growth of big data, more information is available in the life sciences than ever before. With its advanced algorithms, AI is rapidly changing the healthcare industry.

Regulatory agencies increasingly recognize the pivotal role that AI plays in the life sciences. It is reshaping public health, clinical practice, research, drug development, disease surveillance, and the management of the industry as a whole. AI drives innovation and can improve patient outcomes.

Despite this praise, AI in pharma has also raised controversy over bias, privacy, and safety.

In a study titled Artificial intelligence in healthcare, the Scientific Foresight Unit ('STOA') of the European Parliamentary Research Service analyzed its applications, risks and opportunities, and ethical and social impacts.

The study highlighted that AI in pharma can be used to automate repetitive tasks and help doctors to diagnose and treat illnesses. But AI also has risks: AI errors, misuse of biomedical AI tools, AI biases, lack of AI transparency, data privacy and security issues, gaps in AI accountability, and obstacles in AI implementation.

For example, biometrics and facial recognition technologies rely on complex AI algorithms to handle the vast amounts of information they process. This technology has raised concerns about privacy and fundamental human rights, and it poses many ethical questions.

The design, development, and deployment of AI technologies for health must take ethics and human rights into account, too.

As a result, it is essential to develop regulatory oversight mechanisms to ensure private organizations are accountable and responsive to those who may benefit from AI products and services, as well as to ensure transparency.

Potential AI applications in the life sciences industry

AI is revolutionizing the life sciences industry in a variety of ways, from drug discovery and development to personalized medicine and disease diagnosis.

According to a 2023 Definitive Healthcare survey of 86 leaders of biopharma and medical device companies, “the global AI in the life sciences market is projected to reach $7.09 billion by 2028, growing at a compound annual growth rate (CAGR) of 25.23%”, and “half of the 50 largest pharmaceutical companies have entered into partnership or licensing agreements with AI companies”. This highlights the significant interest and investment in AI in the life sciences industry.
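As a rough illustration of what a compound annual growth rate implies, a value growing at rate $r$ for $n$ years is multiplied by $(1+r)^n$. The quoted figures do not state the base year or the starting market size, so the five-year horizon used below is purely an assumption:

$$
V_{\text{end}} = V_{\text{start}}\,(1 + r)^{n}, \qquad (1 + 0.2523)^{5} \approx 3.08
$$

Under that assumed horizon, a 25.23% CAGR would correspond to the market roughly tripling over five years.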

AI has the potential to improve efficiency, speed, accuracy, and patient outcomes. The life sciences industry has several potential applications for AI (EY Switzerland, 2023):

- Drug discovery

- Personalized medicine

- Medical imaging

- Clinical trial design

- Medical writing

- Demand forecasting

- Smart advertising

- Intelligent supplier sourcing

- Manufacturing optimization

- Support for SOPs

Current situation and regulations

AI regulations and policies are still under development. Let's take a look at some of the main milestones that have been achieved.

Worldwide

In May 2019, the Organisation for Economic Co-operation and Development (OECD) adopted the OECD Principles for AI (the Recommendation). The Recommendation aims to foster innovation and trust in AI by promoting the responsible stewardship of trustworthy AI while respecting human rights and democratic values.

In June 2021, WHO published guidance on the Ethics and governance of artificial intelligence for health. It outlines six consensus principles to ensure AI works to the benefit of humankind and identifies the associated ethical challenges and risks. The guidance gave policymakers, AI developers and designers, and healthcare providers an initial grounding for designing, developing, using, and regulating AI technology for health.

In October 2023, WHO published Regulatory considerations on artificial intelligence for health. The publication explores regulatory and health technology assessment concepts and good practices for the development and use of AI in health care and therapeutic development.

There are other developments in Europe and the US. These developments have the potential to shape the future of AI use in the medicines lifecycle.

EMA in Europe

The EMA is working to stay up to date with advances in AI so that it remains at the forefront of the industry.

Here are the key moments in Europe:

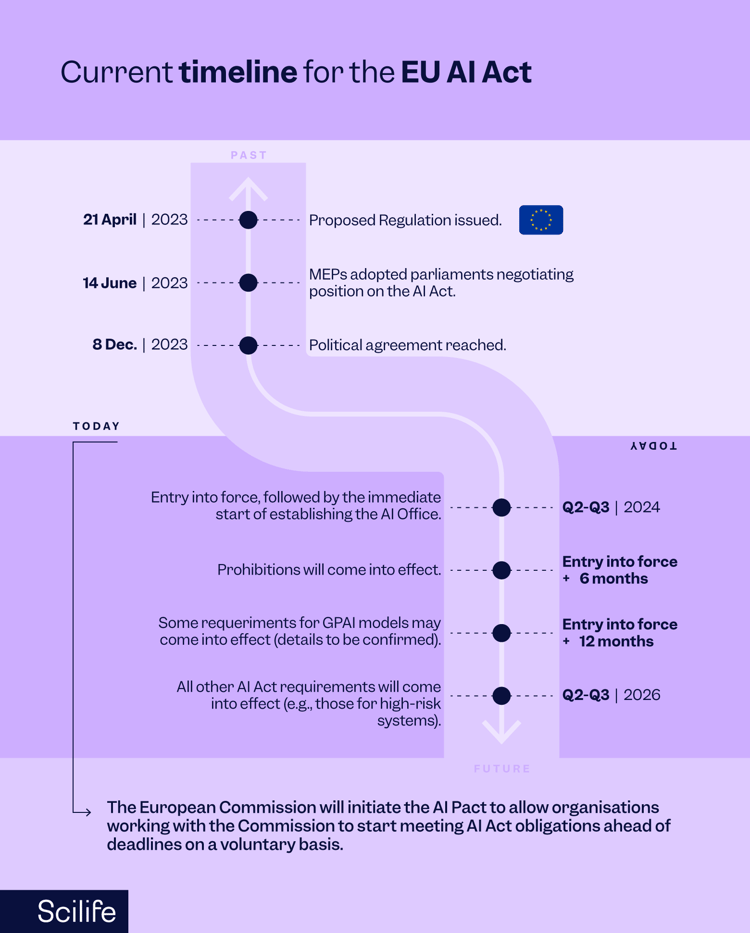

In April 2021, the European Commission published the first proposal for the Artificial Intelligence Act ("EU AI Act"). It was the first proposed regulation with harmonized rules on AI.

In July 2023, as part of the Big Data Workplan 2022-2025, the Big Data Steering Group of the European Medicines Agency (EMA) published a draft reflection paper on the use of AI in the medicinal product lifecycle. The paper outlined current thinking on how AI can support the development, regulation, and use of safe and effective medicines for humans and animals. Through it, the EMA opened a dialogue with developers, academics, and other regulators so that patients and animals could benefit from these innovations. According to the EMA, the use of AI should always comply with existing law, take ethics into account, and respect fundamental rights (EMA, 2023).

In December 2023, the public consultation on the AI reflection paper (RP) ended, and the process of developing guidance began, including whether multiple guidelines were needed.

In December 2023, the EMA and the Heads of Medicines Agencies (HMA) published an AI workplan for 2023-2028. Its goal is a regulatory system that maximizes the benefits of AI while ensuring that uncertainty is adequately explored and risks are mitigated. The workplan states that comments received on the EMA's AI reflection paper will guide the development and evaluation of AI uses in the medicines lifecycle.

Lastly, in December 2023, the European Parliament and Council reached a political agreement on the European Union's Artificial Intelligence Act ("EU AI Act"), the world's first comprehensive legal framework for the regulation of AI. To promote trust and ensure that AI systems are "safe" and "respect fundamental rights and EU values", the Act regulates AI systems across the EU and encourages AI investment. After the consolidated text is finalized, most of the EU AI Act's provisions will apply two years after it enters into force. See the Parliament’s press release.

Source: Downloaded whitepaper

Let’s now have a look at AI regulatory developments in the US.

FDA in the US

The FDA is committed to ensuring that drugs are safe and effective while facilitating innovation in their development. No AI-specific regulatory guidance applies yet. However, the FDA has invited public comment on the discussion papers it has already published. It is also engaging with stakeholders to better understand the implications of AI-based technologies for the development of drugs and medical devices. The agency plans to finalize its regulatory framework in the near future.

Here is the current situation:

In January 2019, the FDA published Developing a Software Precertification Program: A Working Model, which describes a more streamlined and efficient approach to regulatory oversight of software-based medical devices from manufacturers that have demonstrated a robust culture of quality and excellence.

In April 2019, the FDA published a discussion paper “Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) - Discussion Paper and Request for Feedback” that describes the FDA’s approach to premarket review for artificial intelligence and machine learning-driven software modifications.

In January 2021, the FDA published the Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan, which sets out the FDA’s commitment to support innovative work in the regulation of medical device software and other technologies, such as AI/ML. In this plan, the FDA outlines five goals:

- Tailored regulatory framework for AI/ML-based SaMD

- Good Machine Learning Practice (GMLP)

- A patient-centered approach incorporating transparency for users

- Regulatory science methods related to algorithm bias & robustness

- Real-World Performance (RWP)

The FDA released two discussion papers on AI use in 2023 and asked for feedback on both. The agency is expected to consider this input when developing future regulations.

- The first paper, Artificial Intelligence and Machine Learning in the Development of Drug & Biological Products, aims to stimulate discussion between interested parties, such as pharmaceutical companies, ethicists, academia, patients, and global regulatory and other authorities, on the potential use of AI/ML in drug and biological development and in the development of medical devices used with these treatments. The paper also discusses ways to address possible concerns and risks associated with AI/ML.

- The second paper, Artificial Intelligence in Drug Manufacturing, seeks public feedback on the regulatory requirements applicable to the approval of drugs manufactured using AI technologies.

Artificial Intelligence Workplan

In December 2023, the EMA and the HMA published a multi-annual AI workplan for 2023-2028 covering the European medicines regulatory network (EMRN).

The plan aims to promote the responsible use of AI in the pharmaceutical and medical device industry. Among the initiatives are implementing and monitoring AI for internal regulatory purposes, enhancing network-wide analytics capability, and collaborating with international and EU agencies.

To ensure the safety of patients, the EU is committed to regulating AI effectively and responsibly, emphasizing stakeholder communication, research priorities, and guiding principles.

The workplan signals the EU's commitment to upholding the ethical and responsible use of AI in the life sciences sector.

Through 2028, this workplan will govern AI activities by addressing four key dimensions:

- Guidance, policy, and product support:

  - By Q3 2024, work will be initiated in preparation for the AI Act coming into force.

  - By Q4 2024, an AI observatory will be created to monitor the impact of AI and the emergence of novel systems and approaches.

- Tools & technologies:

  - By Q1 2024, regulators expect to roll out knowledge mining tools for the EMRN.

  - By Q2 2024, a phased roll-out and monitoring of large language models (LLMs) and related chatbots will begin.

  - In Q3 2024, regulators also expect to complete a survey on the network's capability to analyze data using AI.

  - By Q4 2024, a Network tools policy will be published for open and collaborative AI development.

- Collaboration and change management:

  - Regulators expect to work with other partners and stakeholders: EU agencies, international partners, the AI Virtual Community, partner experts on AI, medical devices, and academia.

- Experimentation:

  - In Q1 2024, experimentation cycles will begin.

  - From Q2 2024 until 2026, there are plans to develop guiding principles for the responsible internal use of AI, and to issue technical deep dives in areas such as digital twins.

  - By Q2 2025, a roadmap of network research priorities will be published.

  - By Q2 2027, the research priorities will be revised.

The future of AI in the life sciences: what to expect

In the near future, we can expect to see more guidance and regulations being published on the use of AI.

Effective regulation is needed to ensure that AI is used ethically and responsibly and that patient safety is protected.

The rapid deployment of AI also presents significant challenges, including the need to address issues related to the understanding of complex AI technologies, ethical and responsible use of AI, and the potential lack of transparency in AI decision-making processes.

For AI to succeed and benefit society, governments, organizations, and experts must work together.

Experts recommend taking a holistic approach, addressing issues such as intended use, continuous learning, human interventions, and training models.

In addition, the community at large should work towards shared understanding and mutual learning.

Final thoughts

The integration of artificial intelligence (AI) holds both promise and challenges in the life sciences. As regulatory frameworks evolve and industry leaders navigate ethical issues, a transformative journey is taking place.

The key takeaways are:

- AI regulation in life sciences is advancing rapidly, driven by global efforts to ensure safe and ethical implementation.

- Despite its potential to revolutionize healthcare, AI raises concerns such as bias and privacy, emphasizing the need for ethical considerations and transparency.

- Industry leaders are investing in AI partnerships, highlighting its significant growth potential in enhancing efficiency and patient outcomes.

- Collaboration between regulatory agencies, industry stakeholders, and experts is crucial for shaping future AI policies and guidelines.

- By regulating AI effectively and collaborating with other stakeholders, we can ensure that patient safety is upheld and ethical standards are maintained.
