Natural Language Processing (NLP) is on a significant growth trajectory, and the Life Sciences are increasingly relying on this groundbreaking technology. A subfield of linguistics, computer science, and artificial intelligence (AI), NLP deals with the interaction between computers and human language. Specifically, it involves programming computers to process and analyze large amounts of natural language data.

The result is a computer that can “understand” the contents of documents, including the contextual nuances of the language within them. The technology can then extract information and insights from that content, and categorize and organize them.

Introduction, content moderation, and NLP use cases in the Life Sciences

There are three broad ways to adopt NLP models today: open-source libraries, licensed commercial libraries, and Software-as-a-Service (SaaS) offerings. They compare as follows:

| | Open Source | Commercial Library | SaaS |
|---|---|---|---|
| Time to Market | Fast | Fast | Fastest |
| Compliance | Safe | Safe | Share Your Data |
| Upfront Cost | Free | Pay by License | Pay by Volume |
| Ongoing Cost | Retrain & Maintain | Cheaper at Scale | Expensive at Scale |
| Trainable? | Yes | Yes | No |
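The two cost rows come down to a break-even calculation: a flat license becomes cheaper once annual volume exceeds the license fee divided by the per-document SaaS price. The minimal Python sketch below assumes hypothetical prices (a $50,000 annual license versus $0.25 per document) that do not come from any vendor, and it ignores retraining, maintenance, and infrastructure costs.

```python
def break_even_volume(annual_license_fee, saas_price_per_doc):
    """Documents per year at which a flat license and pay-per-volume cost the same."""
    if saas_price_per_doc <= 0:
        raise ValueError("SaaS price per document must be positive")
    return annual_license_fee / saas_price_per_doc


def cheaper_option(docs_per_year, annual_license_fee, saas_price_per_doc):
    """Return 'license' or 'saas', whichever costs less at this volume (ties go to the license)."""
    saas_cost = docs_per_year * saas_price_per_doc
    return "license" if annual_license_fee <= saas_cost else "saas"


# Hypothetical prices, for illustration only.
LICENSE_FEE = 50_000.0  # flat annual license fee (assumed)
PRICE_PER_DOC = 0.25    # SaaS price per document (assumed)

print(break_even_volume(LICENSE_FEE, PRICE_PER_DOC))        # 200000.0
print(cheaper_option(100_000, LICENSE_FEE, PRICE_PER_DOC))  # saas
print(cheaper_option(400_000, LICENSE_FEE, PRICE_PER_DOC))  # license
```

Under these assumed prices, pay-by-volume is cheaper below 200,000 documents a year and the license is cheaper above it, which is the "cheaper at scale" versus "expensive at scale" pattern in the table.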

In 2020, Facebook AI released M2M-100, the first multilingual machine translation model that could translate directly between any pair of 100 languages without relying on English. M2M-100 was trained on 2,200 language directions, 10 times more than earlier English-centric multilingual models. The approach was also more scalable and more customizable.
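To put the 2,200 figure in context, the sketch below counts translation directions, assuming for simplicity an English-centric baseline that covers the same 100 languages (each language paired with English in both directions). Real baselines varied in size, so treat the ratio as a rough check on the tenfold claim rather than an exact figure.

```python
def all_directions(n_languages):
    """Directed pairs when any language can be translated into any other."""
    return n_languages * (n_languages - 1)


def english_centric_directions(n_languages):
    """Directed pairs when every non-English language is paired only with English, both ways."""
    return 2 * (n_languages - 1)


N_LANGUAGES = 100
TRAINED_DIRECTIONS = 2_200  # figure quoted for M2M-100 in the text

print(all_directions(N_LANGUAGES))              # 9900
print(english_centric_directions(N_LANGUAGES))  # 198
print(round(TRAINED_DIRECTIONS / english_centric_directions(N_LANGUAGES), 1))  # 11.1
```

Under this assumption, 2,200 directions is roughly 11 times the 198 of an English-centric model, while still covering only about 22% of the 9,900 possible directions.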

Other examples of NLP include DALL-E, a popular platform that generates images from text prompts. GitHub, meanwhile, has been piloting an AI tool that can write code automatically.

Then there are open-source resources in the Life Sciences such as PubMedQA, a biomedical question-answering dataset built from PubMed abstracts. Models trained on it let users ask research questions and return an answer of yes, no, or maybe. They use NLP to analyze the structure of each question, classify it, and determine the best answer from the relevant abstract.

Examples of PubMedQA questions:

- Do spontaneous electrocardiogram alterations predict ventricular fibrillation in Brugada syndrome?

- Do liver grafts from selected older donors have significantly more ischaemia reperfusion injury?
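Both example questions share a structure: they open with an auxiliary verb and call for a polar answer, which matches PubMedQA's yes/no/maybe answer format. As a toy illustration of analyzing question structure, the sketch below classifies questions by their first word. The word lists are assumptions made for this example, and actual PubMedQA systems rely on trained models rather than rules like these.

```python
import re

# Illustrative word lists (assumptions, not part of PubMedQA).
AUXILIARIES = {"do", "does", "did", "is", "are", "was", "were", "can", "could",
               "should", "will", "would", "has", "have", "had", "may", "might"}
WH_WORDS = {"what", "which", "who", "whom", "whose", "when", "where", "why", "how"}


def question_type(question):
    """Classify a question by its opening word: 'yes/no', 'wh', or 'other'."""
    words = re.findall(r"[a-z]+", question.lower())
    if not words or not question.rstrip().endswith("?"):
        return "other"
    if words[0] in AUXILIARIES:
        return "yes/no"  # polar question: answerable with yes, no, or maybe
    if words[0] in WH_WORDS:
        return "wh"      # open question: needs a span or free-text answer
    return "other"


for q in [
    "Do spontaneous electrocardiogram alterations predict ventricular fibrillation in Brugada syndrome?",
    "What causes Brugada syndrome?",
]:
    print(question_type(q), "|", q)
```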

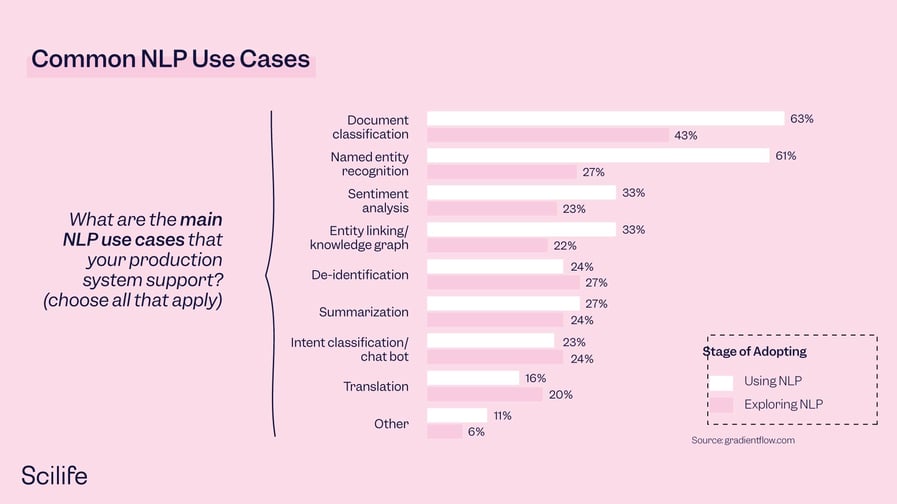

This brings us to the most common NLP use cases, which include:

- Document classification, or text categorization. This is the task of assigning a document to one or more categories.

- Online content moderation, or deciding whether an online product review is valid by making several document classification decisions.

- Toxic content detection, or automatically detecting hate speech, obscene language, or threats on social media.
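All three use cases reduce to mapping a document to one or more labels. As a minimal, self-contained sketch, the Python below trains a multinomial Naive Bayes classifier on a handful of hypothetical toxic and non-toxic comments. Naive Bayes is a classic baseline swapped in here for illustration; modern production systems typically fine-tune pretrained language models instead.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> token frequencies
        self.doc_counts = Counter()              # label -> number of documents
        self.vocab = set()

    def fit(self, docs, labels):
        for doc, label in zip(docs, labels):
            tokens = tokenize(doc)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, doc):
        """Return the label with the highest log-posterior for `doc`."""
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            score = math.log(n_docs / total_docs)  # log prior
            n_words = sum(self.word_counts[label].values())
            for token in tokenize(doc):
                if token in self.vocab:  # skip words never seen in training
                    count = self.word_counts[label][token]
                    score += math.log((count + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training data for a toxic-comment filter.
train_docs = [
    "you are an idiot and I hate you",
    "I will hurt you",
    "shut up you stupid idiot",
    "thanks for the helpful review",
    "great product works as described",
    "the delivery was fast and the item is great",
]
train_labels = ["toxic", "toxic", "toxic", "ok", "ok", "ok"]

clf = NaiveBayesClassifier().fit(train_docs, train_labels)
print(clf.predict("you stupid idiot"))       # toxic
print(clf.predict("great product, thanks"))  # ok
```

Content moderation can chain several such classifiers (for example, spam, toxicity, and relevance) and accept a review only if every check passes.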

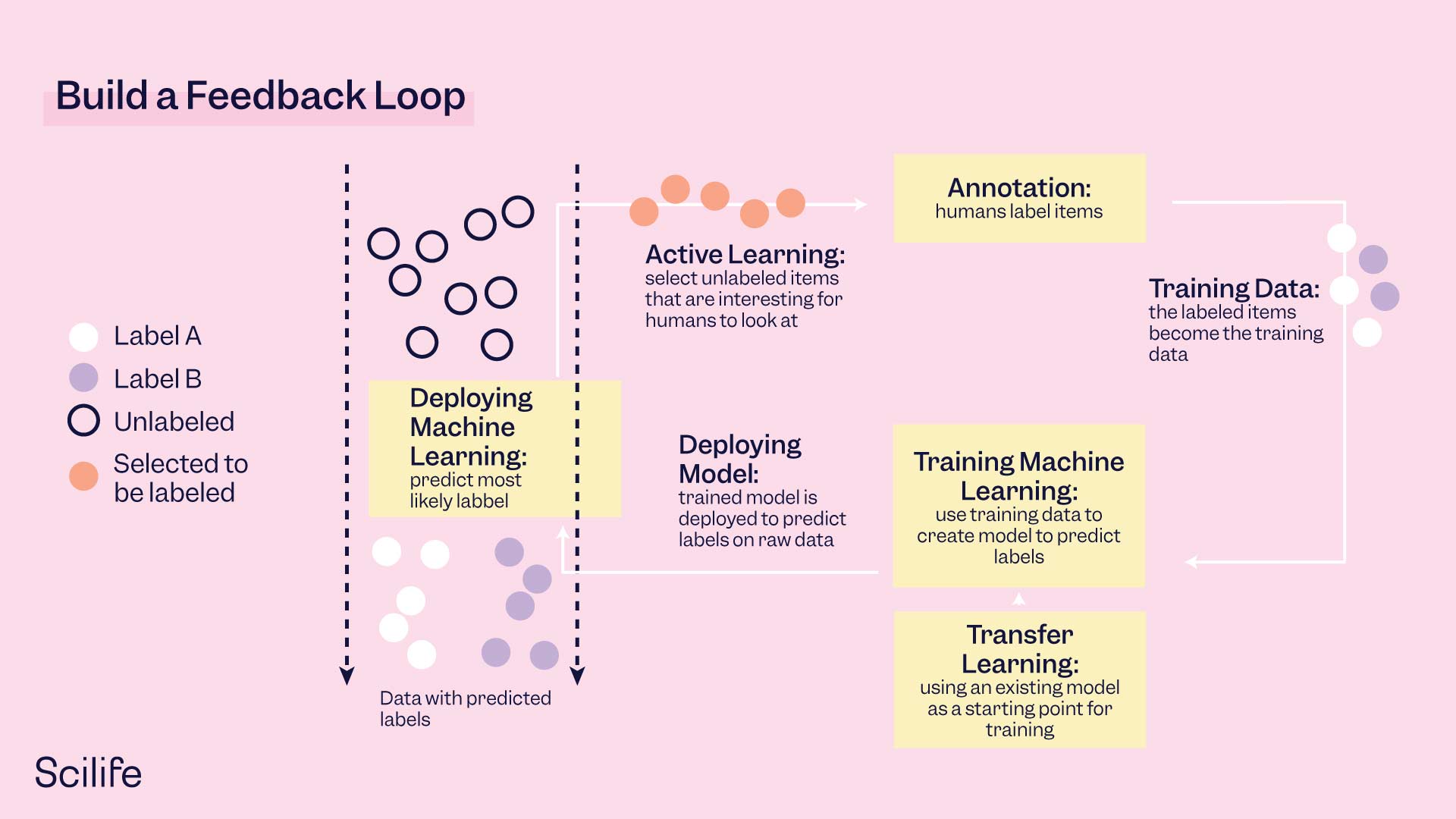

When evaluating an NLP solution, organizations should weigh factors such as accuracy, production readiness, speed, fit with the existing software stack, and scalability. It’s important to note that AI models are imperfect: if your product assumes they are always accurate, that is a design error. Humans should use a feedback loop to monitor and improve their systems.

Great open-source, pre-trained models and code already exist for popular NLP problems, and models can be tuned to your exact requirements, but they must be updated on a regular basis. For optimal results, keep your people in the loop throughout.
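A human feedback loop can be as simple as routing low-confidence predictions to reviewers and tracking how often reviewers agree with the model, retraining when agreement drops. The sketch below is one hypothetical way to do that; the `ReviewQueue` class, the 0.8 confidence threshold, and the 0.9 agreement target are illustrative assumptions, not values from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Hypothetical human-in-the-loop wrapper around any classifier's output."""

    threshold: float = 0.8       # assumed: below this confidence, a human decides
    retrain_below: float = 0.9   # assumed: minimum acceptable model/human agreement
    pending: list = field(default_factory=list)   # (text, model_label) awaiting review
    feedback: list = field(default_factory=list)  # (text, model_label, human_label)

    def route(self, text, label, confidence):
        """Auto-accept confident predictions; queue the rest for a reviewer."""
        if confidence >= self.threshold:
            return "auto"
        self.pending.append((text, label))
        return "human"

    def record_review(self, text, model_label, human_label):
        """Store a reviewer's decision (reusable as training data) and clear it from the queue."""
        if (text, model_label) in self.pending:
            self.pending.remove((text, model_label))
        self.feedback.append((text, model_label, human_label))

    def agreement(self):
        """Share of reviewed items where the reviewer kept the model's label."""
        if not self.feedback:
            return 1.0
        agreed = sum(1 for _, model, human in self.feedback if model == human)
        return agreed / len(self.feedback)

    def needs_retraining(self):
        return self.agreement() < self.retrain_below


review_queue = ReviewQueue()
print(review_queue.route("Great product, works as described.", "ok", 0.97))  # auto
print(review_queue.route("Worst seller ever, you clowns.", "ok", 0.55))      # human
review_queue.record_review("Worst seller ever, you clowns.", "ok", "toxic")
print(review_queue.agreement(), review_queue.needs_retraining())             # 0.0 True
```

Because only low-confidence items reach reviewers here, the agreement rate is pessimistic; in practice you would also spot-check a random sample of auto-accepted predictions before deciding to retrain.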

Creating, updating, and querying a biomedical knowledge graph

In Pharma, there is a great deal of unstructured data: an estimated 80% of all data. Likewise, in the Life Sciences, experts have estimated that, given the amount of new research published each day, physicians would need to read clinical studies for 29 hours per day to keep up with the latest advances. This is clearly impossible.

A biomedical knowledge graph can help. Such a graph represents entities (for example, drugs, genes, and diseases) and the relationships between them, consolidating data from many sources. Rather than reading all day, physicians can let NLP extract the information and then explore it directly in the knowledge graph.
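At its core, a knowledge graph is a set of (subject, relation, object) triples that can be queried in both directions. The minimal Python sketch below illustrates that idea with an in-memory store; the `KnowledgeGraph` class, the relation names, and the triples are illustrative assumptions, and real deployments typically use a dedicated graph database.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A minimal in-memory store of (subject, relation, object) triples."""

    def __init__(self):
        self.outgoing = defaultdict(list)  # subject -> [(relation, object), ...]
        self.incoming = defaultdict(list)  # object  -> [(relation, subject), ...]

    def add(self, subject, relation, obj):
        self.outgoing[subject].append((relation, obj))
        self.incoming[obj].append((relation, subject))

    def objects(self, subject, relation):
        """Everything `subject` points to via `relation`."""
        return [o for r, o in self.outgoing.get(subject, []) if r == relation]

    def subjects(self, relation, obj):
        """Everything that points to `obj` via `relation`."""
        return [s for r, s in self.incoming.get(obj, []) if r == relation]


# Illustrative triples of the kind an NLP pipeline might extract from literature.
kg = KnowledgeGraph()
for triple in [
    ("metformin", "treats", "type 2 diabetes"),
    ("insulin", "treats", "type 2 diabetes"),
    ("metformin", "may_cause", "lactic acidosis"),
]:
    kg.add(*triple)

print(kg.subjects("treats", "type 2 diabetes"))  # ['metformin', 'insulin']
print(kg.objects("metformin", "may_cause"))      # ['lactic acidosis']
```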

How do you create a knowledge graph?

- Data collection and ingestion: This step combines public datasets to make the data structured and accessible.

- Inference: The NLP model runs over the data as needed to identify entities and the relationships between them, turning unstructured data into structured data where relevant.

- Graph thinking connection: Here the model joins disparate facts into a connected graph, leveraging advanced graph algorithms like link prediction, node classification, and more.

- Customized analytics/tool discovery: The model can also cater to various profiles in the organization, allow for the customization of insights, and learn about the underlying domain.
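Link prediction, one of the graph algorithms in the list above, ranks pairs of nodes that are not yet connected by how likely a link between them is. The sketch below uses the Jaccard coefficient of the two nodes' neighbor sets, one of the simplest neighborhood-based heuristics (swapped in for illustration; production systems often use graph embeddings or graph neural networks). The graph and node names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def jaccard_link_scores(edges):
    """Score every unconnected node pair by the Jaccard coefficient of their neighborhoods."""
    neighbors = defaultdict(set)
    for a, b in edges:  # treat the graph as undirected
        neighbors[a].add(b)
        neighbors[b].add(a)

    scores = {}
    for u, v in combinations(sorted(neighbors), 2):
        if v in neighbors[u]:
            continue  # already linked; nothing to predict
        shared = neighbors[u] & neighbors[v]
        if shared:
            scores[(u, v)] = len(shared) / len(neighbors[u] | neighbors[v])
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical graph: drugs linked to gene targets and diseases.
edges = [
    ("drug_A", "gene_X"), ("drug_A", "gene_Y"),
    ("drug_B", "gene_X"), ("drug_B", "gene_Y"), ("drug_B", "disease_D"),
    ("gene_Z", "disease_D"),
]
for pair, score in jaccard_link_scores(edges)[:3]:
    print(pair, round(score, 2))
```

Here drug_A and drug_B share both gene targets, so the heuristic ranks them as likely related (score 0.67), a signal an analyst might follow up on.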

Biomedical knowledge graphs are truly critical to representing biomedical concepts and relationships.

Migration of clinical trial documents to the trial master file (TMF)

TMF migration is essential because the TMF contains the trial documentation maintained in line with Good Clinical Practice (GCP).

Accordingly, when a company acquires another company, a drug, or a new IT system, the TMF needs to be migrated to the company standard. There is no one-to-one mapping between standards, and tens or hundreds of thousands of documents may need to be classified, with their metadata extracted.

A traditional, manual document migration process is slow and inconsistent. AI-assisted migration tools, however, are faster and more consistent.

This is because AI-enabled migration tools can:

- Extract information based on the training data.

- Classify documents and extract the most relevant information.

- Leverage optical character recognition (OCR) capabilities, plus handwriting detection and recognition.
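To make the classification and extraction steps concrete, here is a rule-based stand-in written in Python. It assumes the document text has already been produced (for example, by OCR), uses hypothetical keyword rules, artifact names, and site-ID format, and sends unmatched or ambiguous documents to manual review instead of guessing. A real AI-assisted tool would learn these decisions from training data rather than from hard-coded keywords.

```python
import re

# Hypothetical keyword rules mapping text cues to target artifact types.
RULES = {
    "Protocol": ["protocol", "study design", "inclusion criteria"],
    "Informed Consent Form": ["informed consent", "participant signature"],
    "Monitoring Visit Report": ["monitoring visit", "site visit report"],
}
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")  # assumes ISO 8601 dates
SITE_RE = re.compile(r"\bSite\s+(?:ID\s*)?[:#]?\s*(\d{3,})\b", re.IGNORECASE)  # assumed site-ID format


def classify(text):
    """Pick the artifact with the most keyword hits; flag ties or no hits for review."""
    lowered = text.lower()
    hits = {artifact: sum(kw in lowered for kw in kws) for artifact, kws in RULES.items()}
    best = max(hits, key=hits.get)
    if hits[best] == 0 or list(hits.values()).count(hits[best]) > 1:
        return "NEEDS_REVIEW"
    return best


def extract_metadata(text):
    """Pull dates and a site identifier out of (OCR'd) document text."""
    site = SITE_RE.search(text)
    return {"dates": DATE_RE.findall(text), "site_id": site.group(1) if site else None}


doc = "Monitoring visit report for Site 1042, visit held 2021-03-15."
print(classify(doc))          # Monitoring Visit Report
print(extract_metadata(doc))  # {'dates': ['2021-03-15'], 'site_id': '1042'}
```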

AI-assisted migration tools can also offer language detection and support both instant and batch processing. Overall, AI-assisted TMF migration can deliver extensive time and cost savings.

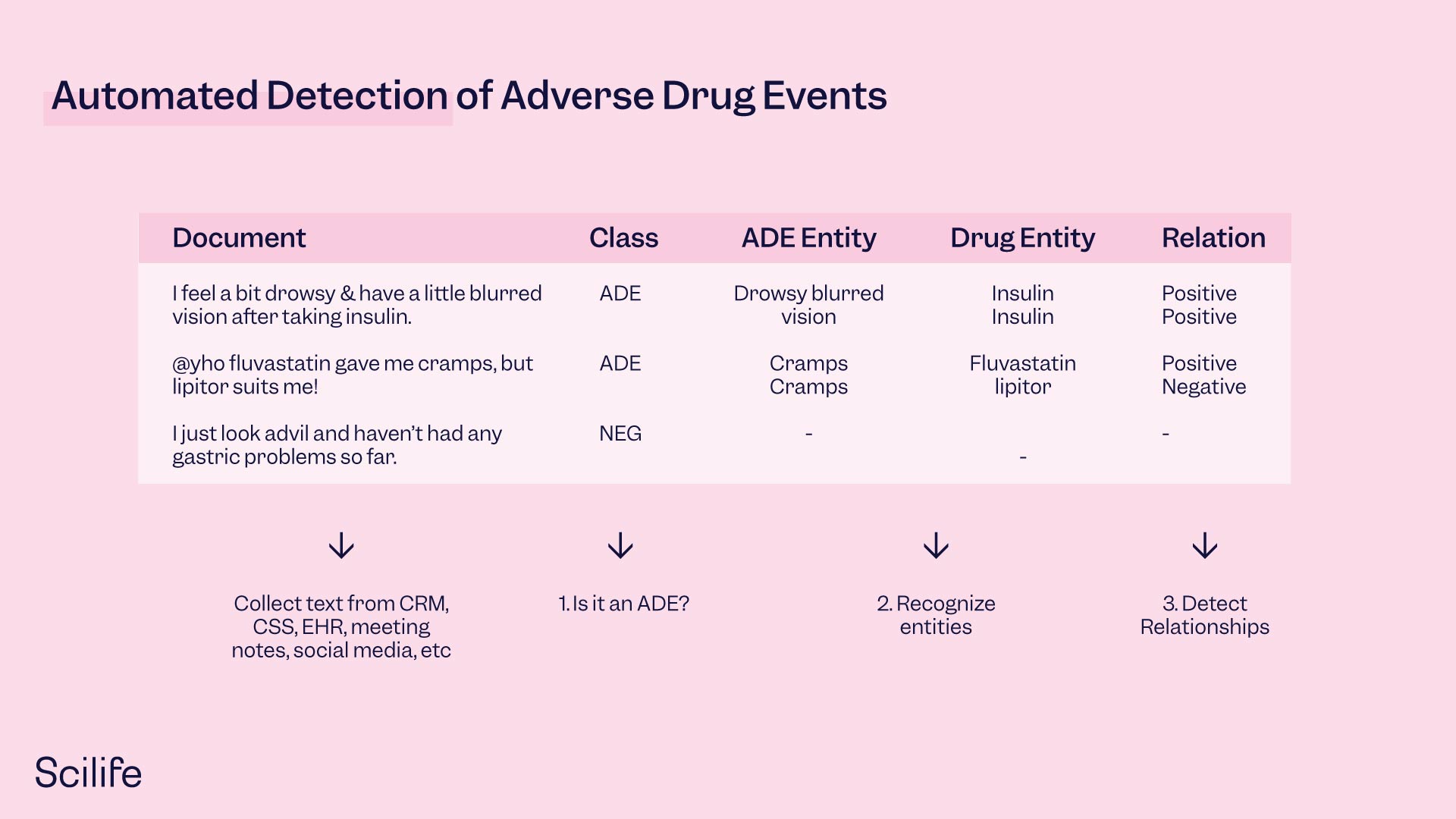

Adverse drug event (ADE) detection and reporting

Let’s discuss adverse event detection and pharmacovigilance. While all medicines and vaccines undergo rigorous testing for safety and efficacy in clinical trials, certain side effects may only emerge once these products are used in the real world by a larger, more diverse patient population, including people with other concurrent diseases.

Reporting adverse drug events is a challenge:

- An estimated 94% of ADEs go unreported.

- On average, teams need to pull data from 3.2 systems to report an ADE.

- Reporting teams don’t have enough time to report everything.

- Siloed systems inhibit data capture and reporting.

- Data may be shared through nontraditional channels (e.g., social media).

Automated ADE detection can help your organization strengthen its reporting. Pre-trained NLP models and pipelines can detect ADEs at scale and in many different languages, ultimately increasing your reporting rate.
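A heavily simplified version of ADE detection flags sentences in which a drug and an adverse event are mentioned together. The sketch below does exactly that with tiny hypothetical lexicons and naive, English-only sentence splitting; it shows the shape of the task, not how pre-trained pharmacovigilance models work.

```python
import re

# Tiny illustrative lexicons (hypothetical). Production systems use trained
# entity-recognition models and coding dictionaries such as MedDRA instead.
DRUGS = {"ibuprofen", "metformin", "amoxicillin"}
ADVERSE_EVENTS = {"rash", "nausea", "dizziness", "stomach pain"}


def mentions(lexicon, sentence):
    """Return lexicon terms that appear as whole words in the sentence, sorted."""
    lowered = sentence.lower()
    return sorted(t for t in lexicon if re.search(rf"\b{re.escape(t)}\b", lowered))


def detect_ades(text):
    """Flag (drug, event, sentence) triples where both co-occur in one sentence."""
    findings = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        for drug in mentions(DRUGS, sentence):
            for event in mentions(ADVERSE_EVENTS, sentence):
                findings.append((drug, event, sentence))
    return findings


post = "Started amoxicillin on Monday and now I have a rash. Metformin is fine."
print(detect_ades(post))
```

A co-occurrence rule like this over-reports: "Ibuprofen did not cause any nausea" would still be flagged, which is why real pipelines add negation handling and a trained relation classifier.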

NLP trajectory and growth

Clearly, natural language processing is here to stay. The 2020 NLP Industry Survey shows increased NLP investment among global organizations.

- 53% of technical leaders indicated their NLP budget was at least 10% higher than in 2019.

- 31% stated their NLP budget was at least 30% higher than in 2019.

The 2021 NLP Industry Survey revealed an even greater NLP investment.

- 60% of technical leaders indicated their NLP budget was at least 10% higher than in 2020.

- 33% stated their budget was at least 30% higher than in 2020.

Meanwhile, Algorithmia’s annual survey, 2021 Enterprise Trends in Machine Learning, found that enterprises are expanding into a wider range of NLP applications, starting with process automation and customer experience.

- 43% of companies say their AI and ML (machine learning) initiatives “matter more than we thought,” with 25% saying AI and ML should have been their top priority sooner.

- 50% of enterprises plan to spend more on AI and ML this year, with 20% saying they will be significantly increasing their budgets.

- 56% of all organizations rank governance, security, and auditability issues as their highest-priority concerns today. In 38% of enterprises surveyed, data scientists spent more than 50% of their time on model deployment.

Enterprises began doubling down on their AI and ML spending in 2021, with 76% of organizations prioritizing AI and ML over other initiatives. Not only that, but the NLP market is predicted to be more than 14 times larger in 2025 than it was in 2017, growing from $3 billion in 2017 to $43 billion in 2025.
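The market forecast can be sanity-checked with two lines of arithmetic: the ratio of the 2025 and 2017 values, and the compound annual growth rate (CAGR) it implies over eight years, where CAGR = (end / start)^(1 / years) - 1. The sketch below uses only the two dollar figures quoted above.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


start, end = 3.0, 43.0  # NLP market size in $ billions, 2017 and 2025 (from the text)
years = 2025 - 2017

print(round(end / start, 2))             # 14.33
print(f"{cagr(start, end, years):.1%}")  # 39.5%
```

In other words, the forecast implies a market growing by roughly 40% a year, compounded, and ending a little over 14 times its 2017 size.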

This remarkable growth is poised to continue. The Life Sciences will undoubtedly continue to leverage (and benefit from) NLP moving forward.
