Artificial intelligence (AI) is transforming industries such as pharmaceuticals and medical devices.

Regulatory agencies and organizations recognize the potential of AI in improving health outcomes. AI offers many possibilities by helping to improve processes, develop novel approaches, and transform data in ways that were not possible before.

Many organizations provide or use AI-based products or services, but are security, safety, trust, ethics, fairness, and transparency adequately addressed?

Not completely.

Addressing these concerns is essential to uphold ethics, human rights, transparency, and accountability in the development and deployment of AI for health.

Organizations must be aware of their responsibilities and take the necessary steps to ensure that AI is used responsibly and safely. Governments, in turn, must create and enforce laws that ensure AI is used ethically and responsibly.

The responsible use of AI in pharma is being addressed by regulatory efforts. However, it has become increasingly difficult for regulatory agencies to keep up with AI technologies as they become more widely used.

This article reviews current and upcoming regulations for pharma and medical devices that are intended to ensure patient safety and protect data privacy and security.

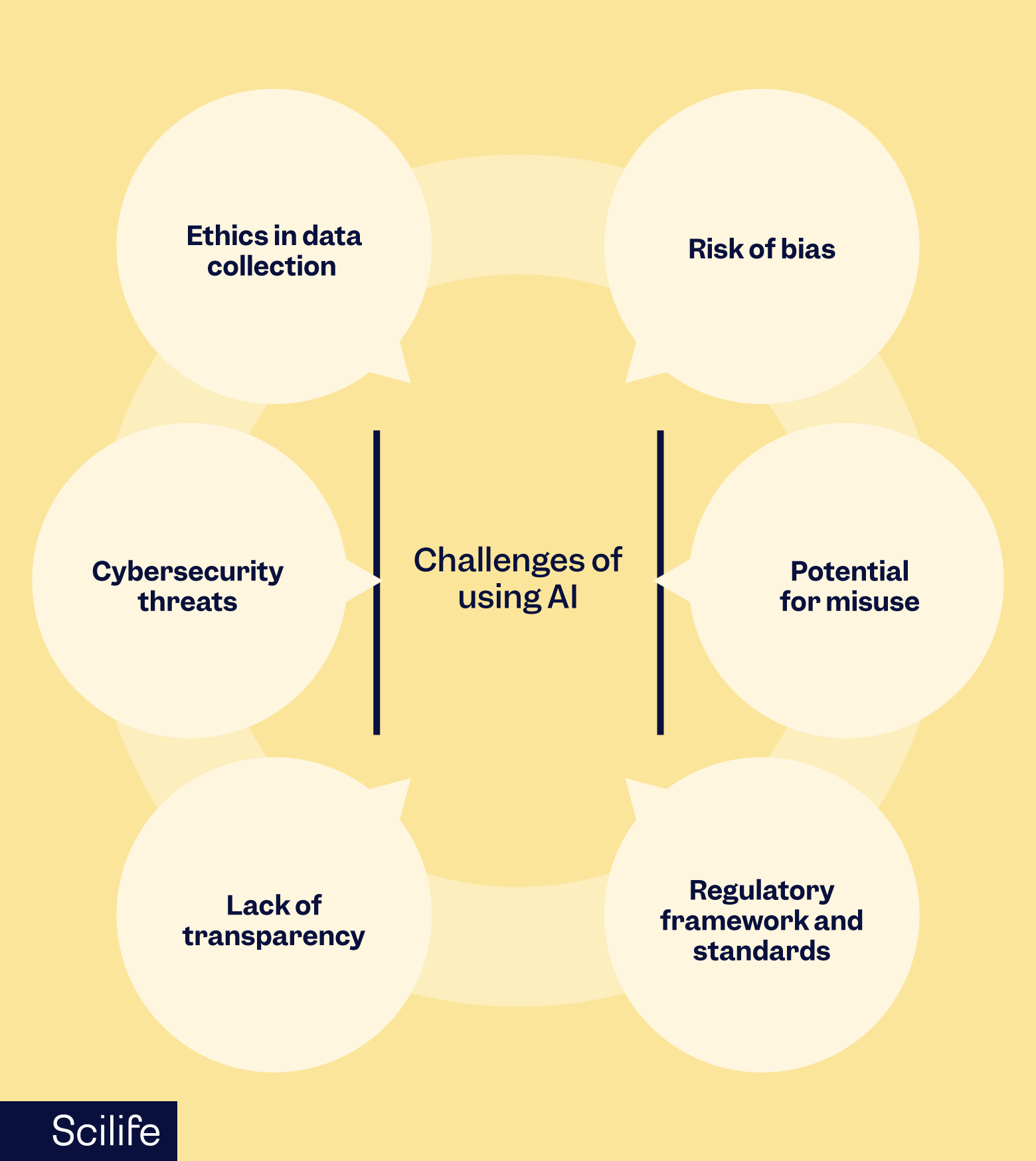

Serious challenges of using AI

To ensure that manufacturers' AI models comply with existing legislation, AI guidance must address serious challenges and caveats. These include:

Ethics in data collection

Using artificial intelligence to handle sensitive medical data raises privacy and security concerns. AI may also undermine individuals' autonomy and dehumanize patient care.

When processing personal data by automated means, manufacturers operating in Europe must comply with the General Data Protection Regulation (GDPR), which requires, among other things, that individuals receive meaningful information about the logic involved in automated decisions made by an AI algorithm.

The power granted to AI in the medical field means that its errors can be life-threatening, for example an incorrect diagnosis or an inappropriate treatment or intervention.

The principle of autonomy requires that AI does not undermine human autonomy. This means that individuals must retain control over their medical decisions and actions in healthcare systems.

Cybersecurity threats

The integration of AI in pharma and medical devices raises concerns about the vulnerability of devices to cyberattacks. Privacy breaches can have serious financial and legal consequences.

Companies and healthcare providers must protect patient data and keep it secure and confidential. This requires strong security measures and staff who are properly trained to handle sensitive data.

Their systems must be regularly monitored and patched to make certain that any vulnerabilities are addressed quickly.
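Regular monitoring can include automated integrity checks. As one illustration (a minimal sketch, not a control prescribed by any regulation discussed here), the Python snippet below verifies that a deployed model file still matches the SHA-256 digest recorded when it was released; a mismatch suggests the artifact was altered. The file name and the practice of storing a release digest are assumptions made for the example.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> bool:
    """Compare a model file's current digest with the digest recorded at release.

    hmac.compare_digest is designed to resist timing attacks during the comparison.
    """
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())


# Usage with a throwaway file standing in for a hypothetical model artifact.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"
    model_path.write_bytes(b"example model weights")
    recorded_digest = sha256_of_file(model_path)  # stored when the model was released
    print(verify_model_artifact(model_path, recorded_digest))  # True
    model_path.write_bytes(b"tampered weights")
    print(verify_model_artifact(model_path, recorded_digest))  # False
```

In practice, the recorded digest itself needs protection (for example, in a signed release manifest), because an attacker who can replace both the model and its digest defeats the check.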

Lack of transparency

Many AI algorithms operate as black boxes, making it difficult to understand how they come to their conclusions.

Without transparency, patients and healthcare professionals cannot easily judge whether an AI output deserves their trust, determine who is accountable for it, or explain the medical decisions based on it.
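One way to reduce the black-box problem is to favor models whose outputs can be decomposed. The sketch below assumes a hypothetical logistic risk model (the feature names, weights, and intercept are invented for illustration, not taken from any real clinical model) and reports each feature's contribution to the log-odds alongside the prediction, the kind of per-decision explanation a clinician could review. For genuinely opaque models, post-hoc attribution methods can play a similar role, although their explanations are derived from the model rather than built into it.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical weights of an interpretable logistic risk model (illustration only).
WEIGHTS: Dict[str, float] = {"age_decades": 0.4, "systolic_bp_per_10": 0.3, "smoker": 0.9}
INTERCEPT = -5.0


def explain_prediction(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the predicted probability and each feature's contribution to the
    log-odds, ordered by absolute contribution (largest first)."""
    contributions = [(name, WEIGHTS[name] * features[name]) for name in WEIGHTS]
    logit = INTERCEPT + sum(value for _, value in contributions)
    probability = 1.0 / (1.0 + math.exp(-logit))
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return probability, contributions


probability, ranked = explain_prediction({"age_decades": 6, "systolic_bp_per_10": 14, "smoker": 1})
print(f"predicted risk: {probability:.3f}")  # predicted risk: 0.924
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```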

Risk of bias

The output of an AI algorithm depends on the quality, distribution, and integrity of its input data, so that data largely determines how well an AI-based device performs.

As a result, it is essential to identify and address any biases that may be present within the input data to ensure safe and effective AI applications.
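A simple first check is whether each patient subgroup appears in the training data roughly in proportion to the population the device is intended for. The sketch below flags groups whose share falls below a chosen fraction of their expected share; the 0.8 ratio, the attribute name, and the reference shares are illustrative assumptions, not regulatory thresholds. Representation is only one dimension of bias, and model performance should also be compared across subgroups.

```python
from collections import Counter
from typing import Dict, Iterable, List


def underrepresented_groups(
    records: Iterable[Dict[str, str]],
    attribute: str,
    reference_shares: Dict[str, float],
    min_ratio: float = 0.8,
) -> List[str]:
    """Return groups whose share of the dataset is below min_ratio times their
    share in the reference (intended patient) population."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    flagged = []
    for group, expected_share in reference_shares.items():
        observed_share = counts.get(group, 0) / total if total else 0.0
        if observed_share < min_ratio * expected_share:
            flagged.append(group)
    return flagged


# Hypothetical training set: 80 records from male patients, 20 from female patients.
training_records = [{"sex": "male"}] * 80 + [{"sex": "female"}] * 20
print(underrepresented_groups(training_records, "sex", {"male": 0.5, "female": 0.5}))  # ['female']
```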

Potential for misuse

Using medical AI tools incorrectly can lead to wrong medical assessments and decision-making, which could result in harm to patients.

Several factors can contribute to this misuse, such as the limited involvement of clinicians and patients in the development of AI, a lack of training in medical AI among healthcare professionals, and the proliferation of easily accessible online and mobile AI solutions without sufficient explanation and information.

Regulatory framework and standards

Advances in AI are outpacing regulations. To adapt existing regulations to AI innovations, regulatory authorities should develop clear guidelines and collaborate with stakeholders in the industry.

General initiatives in Artificial Intelligence

ISO/IEC 42001:2023

To ensure organizations stay ahead of the curve, the International Organization for Standardization (ISO) published ISO/IEC 42001:2023 Information technology — Artificial Intelligence — Management system (AIMS) in December 2023.

The standard specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system within an organization.

The ISO/IEC 42001:2023 standard is the first of its kind in the world. It takes into account the challenges AI poses, such as ethical considerations, transparency, and continuous learning. The standard sets out a structured way to manage risks and opportunities associated with using, developing, monitoring, and providing products or services that utilize AI, balancing innovation with governance.

WHO Regulatory Considerations on Artificial Intelligence for Health

In October 2023, the World Health Organization (WHO) published Regulatory Considerations on Artificial Intelligence for Health, a document outlining key regulatory considerations for AI in health. It emphasizes establishing the safety and effectiveness of AI systems, making appropriate systems available to those who need them, and fostering dialogue among stakeholders about using AI as a positive tool.

A list of 18 regulatory considerations was provided in the document as a resource for regulators. These considerations fall under six broad categories:

- Documentation and transparency

- Risk management

- Intended use and validation

- Data quality

- Privacy and data protection

- Engagement and collaboration

The purpose of these categories is to guide governments and regulatory authorities in developing new guidance on AI or adapting existing guidance so that AI is effectively regulated to maximize its potential in healthcare while minimizing its risks.

AI regulations in pharma and medical devices

Artificial Intelligence in pharma consists of algorithms, models, and techniques that are incorporated into computer systems to automate tasks and make decisions and predictions.

Data analytics is one of the key AI applications in regulatory compliance. Pharma and medical device companies generate volumes of data that are difficult to manage with traditional methods.

With the help of AI algorithms, a great deal of data can be more efficiently analyzed, patterns can be identified, and meaningful insights can be drawn from it.

Compared with manual review, AI-driven tools can scan and analyze data faster and, for well-defined tasks, often more accurately.

However, regulators in the pharma industry acknowledge that AI presents both opportunities and challenges that need to be addressed, and they are pursuing ongoing initiatives to promote responsible AI use.

Let’s discuss the current status of AI regulations in the EU and the US.

Current status in the EU

The EU has been developing new AI rules suited to the scope and use of current and emerging medical technologies on the market.

As part of the Big Data Workplan 2022-2025, the Big Data Steering Group of the European Medicines Agency (EMA) published a draft reflection paper on the use of AI in the medicinal product lifecycle in 2023.

Later that year, the Big Data Steering Group published a workplan setting out the direction AI regulation is taking and how best to 'harness its capabilities'. The workplan reflects the EU's commitment to helping companies benefit from AI while ensuring that it is safe for the public.

Alongside these initiatives, the EU adopted the AI Act (Regulation (EU) 2024/1689), the EU's first comprehensive AI law. The European Commission proposed it in 2021, and it entered into force on August 1, 2024. The AI Act is expected to set a benchmark for the AI regulations that follow it.

Through these initiatives, the European medicines regulatory network aims to remain at the forefront of using AI in medicines regulation.

The Artificial Intelligence Act

The AI Act provides a uniform legal framework for the development, placing on the market, and use of AI in EU countries. Like the EU Medical Devices Regulation (MDR), the AI Act emphasizes safety. It also seeks to promote the uptake of trustworthy AI, including in healthcare, and to prevent unnecessary barriers to its use.

The regulation takes a "risk-based approach": the higher the potential risk of an AI system, the stricter the rules. For high-risk AI systems, providers must implement risk management and post-market monitoring, maintain data quality, keep records, ensure transparency, and apply cybersecurity measures, among other steps.

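Under the Act, AI practices that pose an unacceptable risk to people's safety and fundamental rights, including their autonomy, are banned. Examples of prohibited AI practices include:

- Manipulative or deceptive techniques that distort a person's behavior or decisions in a way that causes significant harm.

- Exploiting the vulnerabilities of specific groups, for example because of their age, disability, or social or economic situation.

- Social scoring.

- Assessing the risk that a person will commit a criminal offense based solely on profiling or personality traits.

- Building facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage.

- Real-time remote biometric identification in publicly accessible spaces for law enforcement purposes.

Some narrow exceptions exist. For example, law enforcement may use real-time remote biometric identification to search for victims of abduction or to prevent an imminent terrorist threat, generally subject to prior authorization. Apart from such exceptions, the bans apply in all EU countries.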

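Once the AI Act applies, organizations will have to audit their AI systems to determine each system's risk category and confirm that it meets the corresponding requirements. An AI system is classified as high-risk in either of the following cases:

- It is a safety component of a product, or is itself a product, covered by the EU legislation listed in Annex I of the regulation (which includes the MDR and the In Vitro Diagnostic Medical Devices Regulation), and it must undergo a third-party conformity assessment under that legislation.

- It is listed in Annex III of the regulation, unless it does not pose a significant risk of harm to a person's health, safety, or fundamental rights.

Annex III lists high-risk use cases in areas such as biometrics, critical infrastructure, education, employment, access to essential public and private services (including emergency patient triage and health insurance), law enforcement, migration, and the administration of justice.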
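The classification logic described above can be summarized as a simplified decision sketch. It assumes that each question has already been answered through legal and regulatory review (the flag names are hypothetical), and it omits details such as the Act's exceptions and the Commission's power to amend Annex III, so it is a study aid rather than a compliance tool. One detail it does encode: an Annex III system that profiles natural persons is always treated as high-risk.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    OTHER = "not high-risk (other obligations may still apply)"


@dataclass
class AISystemProfile:
    # Each flag is a hypothetical answer produced by an internal regulatory review.
    uses_prohibited_practice: bool
    annex_i_product_needing_third_party_assessment: bool  # e.g. AI medical devices needing notified-body review
    listed_in_annex_iii: bool
    poses_significant_risk: bool
    profiles_natural_persons: bool


def classify(profile: AISystemProfile) -> RiskTier:
    """Simplified reading of the Act's risk tiers (prohibitions, then Article 6)."""
    if profile.uses_prohibited_practice:
        return RiskTier.PROHIBITED
    if profile.annex_i_product_needing_third_party_assessment:
        return RiskTier.HIGH_RISK
    if profile.listed_in_annex_iii:
        # Annex III systems escape the high-risk category only if they pose no
        # significant risk of harm, and never if they profile natural persons.
        if profile.profiles_natural_persons or profile.poses_significant_risk:
            return RiskTier.HIGH_RISK
    return RiskTier.OTHER


# Hypothetical example: an AI diagnostic device that needs notified-body review under the MDR.
diagnostic_device = AISystemProfile(
    uses_prohibited_practice=False,
    annex_i_product_needing_third_party_assessment=True,
    listed_in_annex_iii=False,
    poses_significant_risk=True,
    profiles_natural_persons=False,
)
print(classify(diagnostic_device).value)  # high-risk
```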

High-risk AI systems can still be placed on the market. However, their providers are expected to fulfill the following requirements:

- Establish and maintain a risk management system throughout the AI system's lifecycle.

- Apply appropriate data governance and management practices to the training, validation, and testing datasets.

- Draw up technical documentation before the AI system is placed on the market and keep it up to date.

- Design the system to automatically record events (logs) over its lifetime.

- Ensure sufficient transparency, including clear instructions for use, so that deployers can interpret the system's output and use it appropriately.

- Design the system so that, although automated, it can be effectively overseen by people while in use.

- Ensure that the system achieves an appropriate level of accuracy and robustness and is properly protected against cyber threats.
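Record keeping is one of the more concrete requirements to engineer. The following is a minimal sketch, assuming an in-memory log and invented field names, of automatic event logging for an AI system. Each entry stores the model version, a digest of the input rather than the raw input, the output, and the hash of the previous entry, so a later edit to any entry breaks the chain and is caught by `verify()`. The Act does not prescribe this format; a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class EventLog:
    """Append-only, hash-chained event log for an AI system (illustrative sketch)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _hash(entry: Dict[str, Any]) -> str:
        # Hash every field except the entry's own hash, with stable key order.
        body = {key: value for key, value in entry.items() if key != "entry_hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, model_version: str, input_payload: bytes, output: Any, reviewer: str = "") -> Dict[str, Any]:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            # A digest limits personal data in the log, though it may still count as pseudonymized data.
            "input_sha256": hashlib.sha256(input_payload).hexdigest(),
            "output": output,
            "human_reviewer": reviewer,
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH,
        }
        entry["entry_hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was modified or the chain was reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != self._hash(entry):
                return False
            prev_hash = entry["entry_hash"]
        return True


log = EventLog()
log.record("v1.0", b"patient-1 features", {"label": "low"})
log.record("v1.0", b"patient-2 features", {"label": "high"}, reviewer="clinician_a")
print(log.verify())  # True
```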

Notes regarding the AI Act:

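- Research, testing, and development activities carried out before an AI system or model is placed on the market or put into service are outside the scope of the regulation. Testing in real-world conditions is not covered by this exemption.

- The regulation does not apply to individuals who use AI systems for purely personal, non-professional purposes.

- EU law on the "protection of personal data, privacy and the confidentiality of communications", such as the GDPR, continues to apply to AI systems.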

Who will implement the AI Act?

The member states will be responsible for enforcing the AI Act throughout the EU. Each will establish or designate at least one notifying authority, responsible for assessing, designating, notifying, and monitoring the conformity assessment bodies (notified bodies) that certify AI systems, as well as at least one market surveillance authority. National authorities will cooperate with one another and with notified bodies in other member states.

To ensure that the requirements are applied consistently across member states, the European standardization organizations are developing harmonized standards covering requirements, documentation, and reporting processes.

Most provisions will apply from August 2, 2026. Some apply earlier: the prohibitions and general provisions from February 2, 2025, and the obligations for providers of general-purpose AI models from August 2, 2025. The high-risk rules for AI systems in products covered by Annex I legislation, such as medical devices, apply later, from August 2, 2027.

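Fines for non-compliance range from up to 7.5 million euros (roughly $8 million) or 1% of worldwide annual turnover, for supplying incorrect information to authorities, to up to 35 million euros or 7% of worldwide annual turnover, for engaging in prohibited AI practices. Most other violations carry fines of up to 15 million euros or 3%. For larger companies, whichever amount is higher applies; for SMEs and start-ups, whichever is lower.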
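Because each ceiling combines a fixed amount with a share of turnover, the maximum exposure depends on company size. The sketch below encodes the three tiers from Article 99 of the AI Act as maximum amounts only (actual fines are set by the competent authorities, taking the circumstances into account); the violation labels are shorthand invented for the example.

```python
from typing import Dict, Tuple

# Article 99 ceilings: (fixed amount in EUR, share of worldwide annual turnover).
FINE_TIERS: Dict[str, Tuple[float, float]] = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),
    "incorrect_information": (7_500_000, 0.01),
}


def max_fine_eur(violation: str, annual_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine for a violation tier (not a prediction of the actual fine)."""
    fixed, share = FINE_TIERS[violation]
    turnover_based = share * annual_turnover_eur
    # Larger companies face whichever amount is higher; SMEs and start-ups whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)


print(f"{max_fine_eur('prohibited_practice', 1_000_000_000):,.0f}")  # 70,000,000
print(f"{max_fine_eur('prohibited_practice', 10_000_000, is_sme=True):,.0f}")  # 700,000
```

For a company with 1 billion euros in worldwide annual turnover, the turnover-based ceiling for a prohibited practice (70 million euros) exceeds the fixed 35 million euros, so the higher figure applies; for an SME with 10 million euros in turnover, the lower figure (700,000 euros) applies.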

Future changes to the EU AI Act are likely. Organizations will need to stay on top of these changes and be flexible in compliance. In addition to regularly reviewing AI systems against current regulations, organizations will need to maintain open channels with regulators and invest in AI governance training.

The Artificial Intelligence Act's key deadlines to look out for

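In this context, 'entry into force' means that the Act became part of the EU legal order on August 1, 2024. Entry into force is not the same as application, however: the obligations take effect in stages, so August 1, 2024, is not itself a compliance deadline. The key dates are:

- August 1, 2024: the Act enters into force.

- February 2, 2025: the prohibitions on unacceptable-risk AI practices and the general provisions, including AI literacy obligations, apply.

- August 2, 2025: the obligations for providers of general-purpose AI models apply, along with the rules on governance and penalties.

- August 2, 2026: most of the remaining provisions apply, including the requirements for high-risk AI systems listed in Annex III.

- August 2, 2027: the high-risk rules apply to AI systems that are safety components of products, or are themselves products, covered by the EU legislation listed in Annex I, such as the MDR and the In Vitro Diagnostic Medical Devices Regulation.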

Current status in the US

At the time of this post, no comprehensive AI law has been adopted in the US. However, the US regulatory authorities have already issued some guidelines and frameworks for using AI in the medical field.

Let’s start with the medical device sector:

In January 2019, the FDA published “Developing a Software Precertification Program: A Working Model”, which describes a more streamlined and efficient approach to regulatory oversight of software-based medical devices from manufacturers that have demonstrated a robust culture of quality and organizational excellence. The pilot program based on this model concluded in 2022.

In April 2019, the FDA published a discussion paper, “Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) - Discussion Paper and Request for Feedback”, which describes the FDA’s proposed approach to premarket review of AI/ML-driven software modifications. Under this approach, manufacturers could pre-specify anticipated modifications and the algorithm change protocol for implementing them, supported by good machine learning practices, quality assurance measures, and real-world performance monitoring.
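Real-world performance monitoring can be as simple as comparing model outputs with confirmed outcomes over time. The sketch below is a generic illustration, not an FDA-specified method: it tracks agreement over a sliding window and flags the model for review when accuracy drops below a threshold that, in this example, the manufacturer would have pre-specified. The window size, minimum case count, and 0.90 threshold are hypothetical.

```python
from collections import deque
from typing import Deque


class RealWorldPerformanceMonitor:
    """Track agreement between model outputs and confirmed outcomes over a sliding
    window and flag when it falls below a pre-specified threshold (hypothetical values)."""

    def __init__(self, window_size: int = 200, min_accuracy: float = 0.90, min_cases: int = 50) -> None:
        self.window: Deque[bool] = deque(maxlen=window_size)  # oldest cases drop out automatically
        self.min_accuracy = min_accuracy
        self.min_cases = min_cases

    def add_case(self, predicted_label: str, confirmed_label: str) -> None:
        self.window.append(predicted_label == confirmed_label)

    def accuracy(self) -> float:
        return sum(self.window) / len(self.window) if self.window else float("nan")

    def needs_review(self) -> bool:
        # Alert only once enough confirmed cases exist for the estimate to be meaningful.
        return len(self.window) >= self.min_cases and self.accuracy() < self.min_accuracy


monitor = RealWorldPerformanceMonitor(window_size=100, min_accuracy=0.9, min_cases=20)
monitor.add_case("malignant", "malignant")
print(monitor.needs_review())  # False (too few confirmed cases so far)
```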

In January 2021, the FDA published the Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan, which reflects the FDA’s commitment to supporting innovation in the regulation of medical device software and other technologies, such as AI/ML. The plan outlines five actions:

- Tailored regulatory framework for AI/ML-based SaMD

- Good Machine Learning Practice (GMLP)

- A patient-centered approach incorporating transparency for users

- Regulatory science methods related to algorithm bias & robustness

- Real-World Performance (RWP)

This Action Plan indicates the FDA's intentions to update the proposed regulatory framework presented in the discussion paper on AI/ML-based SaMD, including through the issuance of a draft guidance.

Now let’s look at the current situation in pharma and biotech:

In 2023, the FDA released two discussion papers on AI use:

- The first paper, Using Artificial Intelligence and Machine Learning in the Development of Drug and Biological Products, aims to stimulate discussion among interested parties on the potential use of AI/ML in the development of drugs and biological products, as well as devices intended to be used in combination with drugs or biologics. It also discusses possible concerns and risks associated with AI.

- The second paper, Artificial Intelligence in Drug Manufacturing, seeks public feedback on the regulatory requirements that apply to the approval of drugs manufactured using AI technologies. It is part of the Framework for Regulatory Advanced Manufacturing Evaluation (FRAME) initiative of the FDA’s Center for Drug Evaluation and Research (CDER), which supports the adoption of advanced manufacturing technologies such as continuous manufacturing, distributed manufacturing, and AI.

It is expected that the FDA will consider such input when developing future regulations.

In October 2023, the US administration issued an Executive Order on the safe, secure, and trustworthy development and use of AI. It directed many federal departments and agencies to follow eight guiding principles and to evaluate the safety and security of AI technology and other associated risks. Among other measures, it required developers of the most powerful AI systems to share their safety test results with the US government.

The FDA's Cybersecurity Modernization Action Plan, released to strengthen the agency's ability to protect sensitive information and improve its cybersecurity capabilities, also deserves attention. The FDA is committed to ensuring that drugs are safe and effective while facilitating innovations, such as AI, in their development.

Upcoming regulations in the US

No AI-specific regulation has been finalized yet. However, the FDA has invited public comment on the discussion papers it has published and is engaging with stakeholders to better understand how AI-based technologies affect the development of drugs and medical devices.

The agency has indicated that it intends to use this input as it finalizes its regulatory framework.

Conclusion

The integration of artificial intelligence in the pharmaceutical and medical devices sectors presents unprecedented opportunities but demands careful consideration of ethical, security, and transparency concerns.

Regulations such as the EU AI Act, along with US regulatory efforts, are crucial to balancing innovation with governance. The landscape is evolving rapidly, which calls for proactive collaboration, compliance auditing, and continuous learning.

AI's transformative potential in healthcare is within reach, and realizing it requires a shared commitment to responsible adoption for the benefit of individuals and global well-being.